How Shazam Works - An explanation in Python

— Python, Data Science — 9 min read

Have you ever wondered how Shazam works? How it is able to so quickly and efficiently identify songs playing, even on poor mobile phone microphones in crowded locations? Surely with all the imperfections in the recording, you wouldn't be able to match snippets of recordings like you might when searching for lyrics? Fortunately, Shazam is not as magical as it first may seem, requiring only a preliminary understanding of frequency domain analysis.

This article will cover developing a simple prototype of Shazam in Python - building a database of the Billboard Hot 100 (of January 2021), and being able to match iPhone recordings to the original songs, even in the presence of background noise and compression.

Note: While this article covers the basics of Fourier analysis, it does not attempt to be entirely mathematically rigourous - but it conveys enough to understand Shazam. Similarly, this won't be a Python tutorial - but any particularly interesting functions or tricks will be explained.

Getting Set Up

For this article, I'm using Python3 along with Numpy, SciPy, Matplotlib and a couple of other libraries.

The most important things you're going to need are songs to form your database. I happen to have the files for the Billboard Hottest 100, but any set of songs will do. These files, typically MP3's, are most easily worked with in Python when converted into .wav files. There are variety of ways to convert a folder of mp3's to single channel wav files, but I used the following command using ripgrep and ffmpeg:

1ls *.mp3 | rg -o "(.*?)\.mp3" -r '$1' | xargs -n 1 -I '{}' -d '\n' ffmpeg -i '{}.mp3' -ac 1 'converted/{}.wavCode for this article is available on GitHub

Characterising Sound

The first step in building Shazam is to create fingerprints of tracks - identfying some kind of feature of a song that makes it distinct from other songs. Additionally, this kind of fingerpint should be immune to corruption: much like the human ear's ability to filter out background noise and compression artifacts, the fingerprint should be recognisable even in the poorest of conditions.

While Shazam today uses smart phone recordings transmitted over the internet, Shazam launched 2002 and used phone calls with the most horrific quality - and still worked!

The key to fingerprinting is to look at music in terms of the frequencies of sound that combine to make it up, rather than as a string of notes or lyrics over time. This practice is called Fourier analysis, a field of mathematics pioneered in the 18th and 19th centuries, particularly by Joseph Fourier. The primary technique is the Fourier transform, which transforms a signal, such as a song, into the constituent frequencies that comprise it.

Without using Fourier analysis, it is extremely difficult to view a sound wave over time and identify any distinguising characteristics about the sounds contained in it.

For example, here is a view of a sound signal when viewed over time:

1import numpy as np2import matplotlib.pyplot as plt3plt.rcParams['figure.figsize'] = [8, 6]4plt.rcParams['figure.dpi'] = 140 # 200 e.g. is really fine, but slower5from scipy import fft, signal6import scipy7from scipy.io.wavfile import read89# Read the input WAV files10# Fs is the sampling frequency of the file11Fs, song = read("data/001. 24kgoldn - Mood (feat. iann dior).wav")1213time_to_plot = np.arange(Fs * 1, Fs * 1.3, dtype=int)14plt.plot(time_to_plot, song[time_to_plot])15plt.title("Sound Signal Over Time")16plt.xlabel("Time Index")17plt.ylabel("Magnitude");This signal suffers from several problems: there is a lot of high frequency noise (common due to compression and microphone interference) which makes idenfying important peaks or other features difficult; and any background noise will be indistinguishable from the main song.

The Fourier Transform counters most of these difficulties. The Fourier transform maps the relative 'amount' of a specific frequency compared to the other ones. The simplest analogy of what Fourier transform does is to think of the spectrogram that shows up on your hifi or on Youtube videos of music songs.

Let's start with a contrived example, courtesy of the SciPy documentation.

1import matplotlib.pyplot as plt2from scipy.fft import fft, fftfreq3# Number of sample points4N = 6005# sample spacing6T = 1.0 / 800.07x = np.linspace(0.0, N*T, N, endpoint=False)89# Create a signal comprised of 5 Hz wave and an 10 Hz wave10y = np.sin(5.0 * 2.0*np.pi*x) + 0.75*np.sin(10.0 * 2.0*np.pi*x)11yf = fft(y)12xf = fftfreq(N, T)[:N//2]1314plt.subplot(1, 2, 1)15plt.plot(x, y)16plt.xlabel("Time (s)")17plt.subplot(1, 2, 2)18plt.plot(xf, 2.0/N * np.abs(yf[0:N//2]))19plt.grid()20plt.xlabel("Frequency (Hz)")21plt.xlim(0, 60)22plt.show()This example clearly illustrates the spikes at 5 and 10 Hz, demonstrating that there is a large amount of signal 'power' at these two frequencies.

The Fourier transform is extremely powerful when combatting noise and other distortions. See a modified example below:

1y = np.sin(5.0 * 2.0*np.pi*x) + 0.75*np.sin(10.0 * 2.0*np.pi*x)23# Add Gaussian Noise to one of the signals4y_corrupted = y + np.random.normal(0, 2.5, len(y))56# Plot and perform the same FFT as before78plt.subplot(2, 2, 1)9plt.plot(x, y)10plt.xlabel("Time (s)")11plt.grid()12plt.subplot(2, 2, 3)13plt.plot(x, y_corrupted)14plt.xlabel("Time (s)")15plt.grid()1617yf = fft(y)18xf = fftfreq(N, T)[:N//2]19plt.subplot(2, 2, 2)20plt.plot(xf, 2.0/N * np.abs(yf[0:N//2]))21plt.xlim(0, 60)22plt.xlabel("Frequency (Hz)")23plt.grid()2425yf = fft(y_corrupted)26xf = fftfreq(N, T)[:N//2]27plt.subplot(2, 2, 4)28plt.plot(xf, 2.0/N * np.abs(yf[0:N//2]))29plt.xlim(0, 60)30plt.xlabel("Frequency (Hz)")31plt.grid()When viewed in time (on the left), the distorted signal is mostly unrecognisable. While the general shape has not been completely lost, the original peaks and troughs have been completely overruled. However, when viewed in the frequency domain (after performing the Fourier transform), the two peaks for the 5 and 10 Hz signals are still present, even with the large amount of noise.

We can apply this exact same technique to our song from before, 24kgolden's Mood.

1N = len(song)2fft = scipy.fft.fft(song)3transform_y = 2.0 / N * np.abs(fft[0:N//2])4transform_x = scipy.fft.fftfreq(N, 1 / Fs)[:N//2]5plt.plot(transform_x, transform_y)6plt.xlabel("Frequency (Hz)");This spectrum is a bit harder to decipher at first glance, especially when viewing the entire frequency range (the Fourier transform can determine frequencies from 0 to the Nyquist Frequency, half the sampling rate). However, when viewing only from 0 to 2000 Hz, it becomes clear that there are several frequencies of importance.

1plt.plot(transform_x, transform_y)2plt.xlim(0, 2000);We can now use the standard SciPy peak finding methods to find peaks in the spectrum. However, the very noisy makeup of the spectrum means that there are too many peaks to identify regions of actual interest that will be useful in developing fingerprints of the song.

Fortunately, SciPy allows us to constrain our search for only the most important peaks. From my investigations, music tends to have it's strongest peaks in the lower frequency range (< 500Hz), so a peak finding method that only considers the most prominent peaks (peaks with the shallowest 'valley' on either side) will have it's peaks concentrated in the bass frequencies. By specifying a minimum distance and then finding the ten most prominent peaks after that allows finding representative peaks of the sample.

Contrast below the mess of peaks identified by default, with a more constrained version:

1all_peaks, props = signal.find_peaks(transform_y)23peaks, props = signal.find_peaks(transform_y, prominence=0, distance=10000)4n_peaks = 1556# Get the n_peaks largest peaks from the prominences7# This is an argpartition8# Useful explanation: https://kanoki.org/2020/01/14/find-k-smallest-and-largest-values-and-its-indices-in-a-numpy-array/9largest_peaks_indices = np.argpartition(props["prominences"], -n_peaks)[-n_peaks:]10largest_peaks = peaks[largest_peaks_indices]1112plt.plot(transform_x, transform_y, label="Spectrum")13plt.scatter(transform_x[largest_peaks], transform_y[largest_peaks], color="r", zorder=10, label="Constrained Peaks")14plt.xlim(0, 3000)1516plt.show()These peak frequencies are an ideal way to characterise the sound being analysed.

This method currently analyses the spectrum of the entire song as a whole. However, songs tend to change their sound throughout - the chorus and final verse might have completely different spectrums. The short snippet being recorded on a user's phone is unlikely to match the frequency content of the entire song.

To get around this, we can perform the Fourier Transform on short snippets of the song, such as half a second in length. For each snippet, we can again identify the key frequencies. Each snippet's frequencies identifies how the song changes over time - in that way, no matter which part of the song the user uses as a sample, we can match it to a corresponding snippet.

This can easily be acheived by using the SciPy implementation of the Short Time Fourier Transform, which applies the Fourier transform in windows (with slight overlap between each one).

1# Some parameters2window_length_seconds = 33window_length_samples = int(window_length_seconds * Fs)4window_length_samples += window_length_samples % 256# Perform a short time fourier transform7# frequencies and times are references for plotting/analysis later8# the stft is a NxM matrix9frequencies, times, stft = signal.stft(10 song, Fs, nperseg=window_length_samples,11 nfft=window_length_samples, return_onesided=True12)1314stft.shape1(66151, 95)We can then perform the same peak finding as before, and plot the results as a graph of time across the X axis and the frequency of the peak on the Y axis. This forms a constellation of points which characterise the song.

1constellation_map = []23for time_idx, window in enumerate(stft.T):4 # Spectrum is by default complex. 5 # We want real values only6 spectrum = abs(window)7 # Find peaks - these correspond to interesting features8 # Note the distance - want an even spread across the spectrum9 peaks, props = signal.find_peaks(spectrum, prominence=0, distance=200)1011 # Only want the most prominent peaks12 # With a maximum of 5 per time slice13 n_peaks = 514 # Get the n_peaks largest peaks from the prominences15 # This is an argpartition16 # Useful explanation: https://kanoki.org/2020/01/14/find-k-smallest-and-largest-values-and-its-indices-in-a-numpy-array/17 largest_peaks = np.argpartition(props["prominences"], -n_peaks)[-n_peaks:]18 for peak in peaks[largest_peaks]:19 frequency = frequencies[peak]20 constellation_map.append([time_idx, frequency])2122# Transform [(x, y), ...] into ([x1, x2...], [y1, y2...]) for plotting using zip23plt.scatter(*zip(*constellation_map));See how there are different points in time with different important frequencies? Early on, the higher frequencies are dominant (the song begins with a strumming guitar only), before the chorus and other areas are more bass dominant. There are still other regions which are devoid of certain frequencies, when different instruments are cut in and out.

The aim of this constellation is to be entirely unique, with no other song having a constellation quite like it!

In the above code, I have used some parameters which produce less points on the constellation to make the plotting sensible (a longer window with less number of peaks per window). The below method is with the tweaked parameters, and I recommend you use this as the denser constellation greatly aids in identifying songs:

1def create_constellation(audio, Fs):2 # Parameters3 window_length_seconds = 0.54 window_length_samples = int(window_length_seconds * Fs)5 window_length_samples += window_length_samples % 26 num_peaks = 1578 # Pad the song to divide evenly into windows9 amount_to_pad = window_length_samples - audio.size % window_length_samples1011 song_input = np.pad(audio, (0, amount_to_pad))1213 # Perform a short time fourier transform14 frequencies, times, stft = signal.stft(15 song_input, Fs, nperseg=window_length_samples, nfft=window_length_samples, return_onesided=True16 )1718 constellation_map = []1920 for time_idx, window in enumerate(stft.T):21 # Spectrum is by default complex. 22 # We want real values only23 spectrum = abs(window)24 # Find peaks - these correspond to interesting features25 # Note the distance - want an even spread across the spectrum26 peaks, props = signal.find_peaks(spectrum, prominence=0, distance=200)2728 # Only want the most prominent peaks29 # With a maximum of 15 per time slice30 n_peaks = min(num_peaks, len(peaks))31 # Get the n_peaks largest peaks from the prominences32 # This is an argpartition33 # Useful explanation: https://kanoki.org/2020/01/14/find-k-smallest-and-largest-values-and-its-indices-in-a-numpy-array/34 largest_peaks = np.argpartition(props["prominences"], -n_peaks)[-n_peaks:]35 for peak in peaks[largest_peaks]:36 frequency = frequencies[peak]37 constellation_map.append([time_idx, frequency])3839 return constellation_mapCombinatorial Hashing

The constellations produced above are great visual representations of songs - but how might we match a snippet of a recording to another song? As hard as we might try, it's unlikely that a phone recording will have exactly all of the same frequencies present as in the original master. Additionally, many songs use similar frequency ranges at different times, meaning that with only a subset of frequencies it can be difficult to exactly match a particular frequency in the phone recording to the exact song it orignally came from.

The solution taken in Shazam is to dramatically increase the problem space. The points in the constellation map are combinatorially associated - each point is paired with several other points to form pairs of frequencies, stored with the difference in time between them.

If the two frequencies are then converted to 10-bit integers (from 0 to 1024 by placing the exact frequency into a bin) and the time difference between them stored as a 12 bit integer, the pair of points produces a single 32-bit integer hash.

This produces many times more candidate fingerprints for a song than simply with the constellations, and it is also extremely efficient for the computer to match hashes in the cell phone recording with hashes stored in the song database.

The actual combinatorial association is in fact simple to create: for a given point in the constellation, search for a set of points ahead in time up to some limit (say 10 sampling windows). These form the fingerprint pairs.

We can use that hash and store the track ID it is associated with, and the time that that hash occurred at (important for next part).

1constellation_map = create_constellation(song, Fs)23def create_hashes(constellation_map, song_id=None):4 hashes = {}5 # Use this for binning - 23_000 is slighlty higher than the maximum6 # frequency that can be stored in the .wav files, 22.05 kHz7 upper_frequency = 23_000 8 frequency_bits = 10910 # Iterate the constellation11 for idx, (time, freq) in enumerate(constellation_map):12 # Iterate the next 100 pairs to produce the combinatorial hashes13 # When we produced the constellation before, it was sorted by time already14 # So this finds the next n points in time (though they might occur at the same time)15 for other_time, other_freq in constellation_map[idx : idx + 100]: 16 diff = other_time - time17 # If the time difference between the pairs is too small or large18 # ignore this set of pairs19 if diff <= 1 or diff > 10:20 continue2122 # Place the frequencies (in Hz) into a 1024 bins23 freq_binned = freq / upper_frequency * (2 ** frequency_bits)24 other_freq_binned = other_freq / upper_frequency * (2 ** frequency_bits)2526 # Produce a 32 bit hash27 # Use bit shifting to move the bits to the correct location28 hash = int(freq_binned) | (int(other_freq_binned) << 10) | (int(diff) << 20)29 hashes[hash] = (time, song_id)30 return hashes3132# Quickly investigate some of the hashes produced33hashes = create_hashes(constellation_map, 0)34for i, (hash, (time, _)) in enumerate(hashes.items()):35 if i > 10: 36 break37 print(f"Hash {hash} occurred at {time}")1Hash 2494868 occurred at 02Hash 2475412 occurred at 03Hash 2420116 occurred at 04Hash 2378132 occurred at 05Hash 2398612 occurred at 2976Hash 2357652 occurred at 5037Hash 2337172 occurred at 4838Hash 2311572 occurred at 5379Hash 2279828 occurred at 44610Hash 2240916 occurred at 50211Hash 2261396 occurred at 0Generating a Simple Hash Database

Next, we can generate a simple database of all hashes across our collection of 100 songs. There doubtless many ways to accomplish this, including dealing with indexing and persistence. For this article, however, I will keep it simple using a hashmap saved with Pickle.

The above technique produces hashes which are not guaranteed to be unique. So we just need to ensure that each hash has a list of (time, song) pairs which we can later use to score the songs to find a match.

1import glob2from typing import List, Dict, Tuple3from tqdm import tqdm4import pickle56songs = glob.glob('data/*.wav')78song_name_index = {}9database: Dict[int, List[Tuple[int, int]]] = {}1011# Go through each song, using where they are alphabetically as an id12for index, filename in enumerate(tqdm(sorted(songs))):13 song_name_index[index] = filename14 # Read the song, create a constellation and hashes15 Fs, audio_input = read(filename)16 constellation = create_constellation(audio_input, Fs)17 hashes = create_hashes(constellation, index)1819 # For each hash, append it to the list for this hash20 for hash, time_index_pair in hashes.items():21 if hash not in database:22 database[hash] = []23 database[hash].append(time_index_pair)24# Dump the database and list of songs as pickles25with open("database.pickle", 'wb') as db:26 pickle.dump(database, db, pickle.HIGHEST_PROTOCOL)27with open("song_index.pickle", 'wb') as songs:28 pickle.dump(song_name_index, songs, pickle.HIGHEST_PROTOCOL)1100%|██████████| 100/100 [03:15<00:00, 1.96s/it]Finding Matches For Recordings

Although the hashes have a strong amount of entropy as 32 bit integers, they are not guaranteed to be unique across all songs - therefore, we must have a scoring strategy to differentiate which song a particular recording matches.

The simplest method is to create the hashes for the phone recording, and see which song in the database has the most matching hashes. However, as we see in the snippet below, this doesn't greatly differentiate the songs:

1# Load the database2database = pickle.load(open('database.pickle', 'rb'))3song_name_index = pickle.load(open("song_index.pickle", "rb"))1# Load a short recording with some background noise2Fs, audio_input = read("recording2.wav")3# Create the constellation and hashes4constellation = create_constellation(audio_input, Fs)5hashes = create_hashes(constellation, None)67# For each hash in the song, check if there's a match in the database8# There could be multiple matching tracks, so for each match:9# Incrememnt a counter for that song ID by one10matches_per_song = {}11for hash, (sample_time, _) in hashes.items():12 if hash in database:13 matching_occurences = database[hash]14 for source_time, song_id in matching_occurences:15 if song_id not in matches_per_song:16 matches_per_song[song_id] = 017 matches_per_song[song_id] += 11819for song_id, num_matches in list(sorted(matches_per_song.items(), key=lambda x: x[1], reverse=True))[:10]:20 print(f"Song: {song_name_index[song_id]} - Matches: {num_matches}")1Song: data/070. All Time Low - Monsters (feat. Demi Lovato and blackbear).wav - Matches: 221922Song: data/072. Luke Bryan - Down To One.wav - Matches: 216953Song: data/001. 24kgoldn - Mood (feat. iann dior).wav - Matches: 214094Song: data/051. Luke Combs - Forever After All.wav - Matches: 212925Song: data/094. Eric Church - Hell Of A View.wav - Matches: 210576Song: data/008. Drake - Laugh Now Cry Later (feat. Lil Durk).wav - Matches: 204817Song: data/050. Russell Dickerson - Love You Like I Used To.wav - Matches: 204668Song: data/064. Darius Rucker - Beers And Sunshine.wav - Matches: 203739Song: data/056. Yung Bleu - You’re Mines Still (feat. Drake).wav - Matches: 2016010Song: data/048. Niko Moon - GOOD TIME.wav - Matches: 20083The snippet is in fact taken from 24kgoldn's Mood. Therefore, this naive scoring technique would have given the incorrect song.

A superior method is to exploit one thing we know about the hashes: we know the order that they should occur, and we know precisely the difference in time between them. Although phone compression and poor microphones might slighly distort the frequency picked up, they would not alter the timing of these hashes drastically.

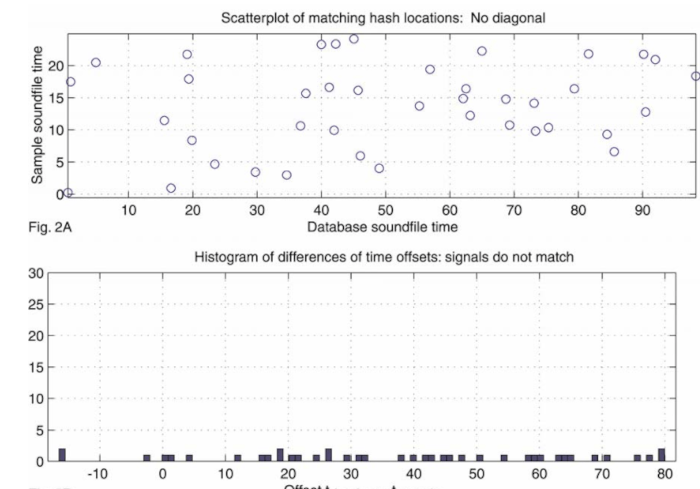

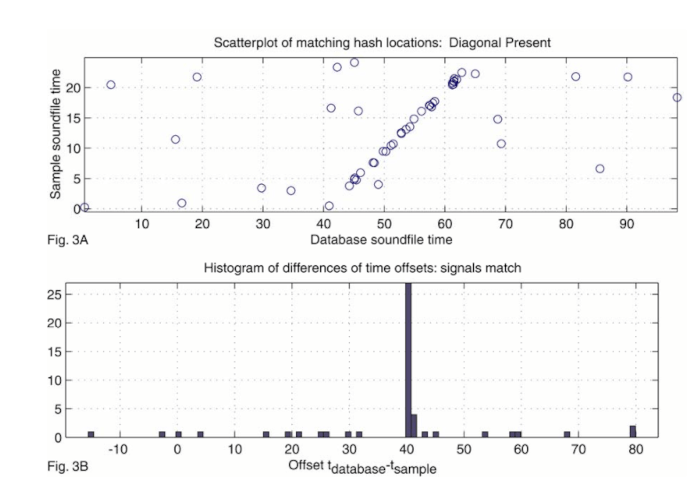

The matches in hashes should draw a straight line through a scatter plot of the matching hashes over time. Compare the two images below (from the original Shazam paper):

Finding a straight line between matching points can be achieved in a variety of methods, such as a Hough transform. However, we know that the slope of the straight line should be exactly 1.0 - the two recordings should be playing at the same speed. Furthermore, the difference in time between the time at which a point occurs in the original database recording and the user's recording should be fixed for the matches along the line.

We can calculate a histogram of the number of matches per offset. The height of the tallest peak in the histogram is the best score for that match. Songs with a lot of similar frequencies, but played at different times, will have a flat histogram with no clear tall peaks. On the other hand, the exact song will have a very clear peak which indicates a perfect match.

We can modify our original scoring algorithm to keep track of the specific hash which matched between the database and the user recording, as well as the time in the database that matched occured and the time it occured in the user sample:

1# Load a short recording with some background noise2Fs, audio_input = read("recording2.wav")3# Create the constellation and hashes4constellation = create_constellation(audio_input, Fs)5hashes = create_hashes(constellation, None)67# For each hash in the song, check if there's a match in the database8# There could be multiple matches, so for each match:9# Append all of them to a hashmap based on the song id along with the time10# the hash occurs in the sample and at the source11# In the end, matches_per_song is key'd by song ID with values being12# lists of hashes, the 13matches_per_song = {}14for hash, (sample_time, _) in hashes.items():15 if hash in database:16 matching_occurences = database[hash]17 for source_time, song_id in matching_occurences:18 if song_id not in matches_per_song:19 matches_per_song[song_id] = []20 matches_per_song[song_id].append((hash, sample_time, source_time))We can then generate the histogram for each track by going combinatorially going through each hash match, and noting the offset in time between them. We keep a counter in a hashmap for each offset. Let's just perform this exercise for two songs for now: the actual match, and an incorrect song

1scores = {}2song_ids = [69, 0] # Song ID=0 is the true match3for song_id in song_ids:4 song_name = song_name_index[song_id].split('-')[1]56 matches = matches_per_song[song_id]7 print(f"Total matches for {song_name}: {len(matches)}")8 song_scores_by_offset = {}9 for hash, sample_time, source_time in matches:10 delta = source_time - sample_time11 if delta not in song_scores_by_offset:12 song_scores_by_offset[delta] = 013 song_scores_by_offset[delta] += 11415 # Produce a histogram16 # For clarity's sake, only plot the 100 largest offsets17 high_scores = list(sorted(song_scores_by_offset.items(), key=lambda x: x[1], reverse=True))[:100]18 plt.figure()19 plt.bar(*zip(*high_scores))20 plt.title(song_name)21 plt.ylim((0, 900))1Total matches for Monsters (feat. Demi Lovato and blackbear).wav: 221922Total matches for Mood (feat. iann dior).wav: 21409See how the total matches for Monsters is actually higher than the true match, Mood? However, there are significant spikes at a time indices of ~240 and ~430 for Mood, however, there is no spike even close in height for Monsters!

This clearly indicates that Mood is a much better match than Monsters. This method to find a match can be extended to all songs, with each song being assigned a score based on the largest peak in it's histogram.

1scores = {}2for song_index, matches in matches_per_song.items():3 song_scores_by_offset = {}4 for hash, sample_time, source_time in matches:5 delta = source_time - sample_time6 if delta not in song_scores_by_offset:7 song_scores_by_offset[delta] = 08 song_scores_by_offset[delta] += 1910 max = (0, 0)11 for offset, score in song_scores_by_offset.items():12 if score > max[1]:13 max = (offset, score)14 15 scores[song_index] = max1617for song_index, score in list(sorted(scores.items(), key=lambda x: x[1][1], reverse=True))[:5]:18 print(f"{song_name_index[song_index]}: Score of {score[1]} at {score[0]}")1data/001. 24kgoldn - Mood (feat. iann dior).wav: Score of 912 at 2392data/068. Thomas Rhett - What’s Your Country Song.wav: Score of 201 at 6113data/081. Morgan Wallen - Somebody’s Problem.wav: Score of 191 at 5164data/064. Darius Rucker - Beers And Sunshine.wav: Score of 181 at 6425data/056. Yung Bleu - You’re Mines Still (feat. Drake).wav: Score of 165 at 789The final total method to find a match is below:

1def score_hashes_against_database(hashes):2 matches_per_song = {}3 for hash, (sample_time, _) in hashes.items():4 if hash in database:5 matching_occurences = database[hash]6 for source_time, song_index in matching_occurences:7 if song_index not in matches_per_song:8 matches_per_song[song_index] = []9 matches_per_song[song_index].append((hash, sample_time, source_time))10 1112 # %%13 scores = {}14 for song_index, matches in matches_per_song.items():15 song_scores_by_offset = {}16 for hash, sample_time, source_time in matches:17 delta = source_time - sample_time18 if delta not in song_scores_by_offset:19 song_scores_by_offset[delta] = 020 song_scores_by_offset[delta] += 12122 max = (0, 0)23 for offset, score in song_scores_by_offset.items():24 if score > max[1]:25 max = (offset, score)26 27 scores[song_index] = max2829 # Sort the scores for the user30 scores = list(sorted(scores.items(), key=lambda x: x[1][1], reverse=True)) 31 32 return scoresWe can test our new scoring with two audio recordings. The first is a relatively pristine recording taken with my phone, the second includes background conversation from a news broadcast. Unsurprisingly, both are matched equally well!

Recording 1:

Recording 2:

1def print_top_five(file_name):2 # Load a short recording with some background noise3 Fs, audio_input = read(file_name)4 # Create the constellation and hashes5 constellation = create_constellation(audio_input, Fs)6 hashes = create_hashes(constellation, None)78 scores = score_hashes_against_database(hashes)[:5]9 for song_id, score in scores:10 print(f"{song_name_index[song_id]}: Score of {score[1]} at {score[0]}")1112print("Recording 1:")13print_top_five("recording1.wav")1415print("\n\nRecording 2:")16print_top_five("recording2.wav")1Recording 1:2data/001. 24kgoldn - Mood (feat. iann dior).wav: Score of 869 at 1393data/048. Niko Moon - GOOD TIME.wav: Score of 170 at 7784data/056. Yung Bleu - You’re Mines Still (feat. Drake).wav: Score of 168 at 8405data/050. Russell Dickerson - Love You Like I Used To.wav: Score of 168 at 6466data/094. Eric Church - Hell Of A View.wav: Score of 166 at 609789Recording 2:10data/001. 24kgoldn - Mood (feat. iann dior).wav: Score of 912 at 23911data/068. Thomas Rhett - What’s Your Country Song.wav: Score of 201 at 61112data/081. Morgan Wallen - Somebody’s Problem.wav: Score of 191 at 51613data/064. Darius Rucker - Beers And Sunshine.wav: Score of 181 at 64214data/056. Yung Bleu - You’re Mines Still (feat. Drake).wav: Score of 165 at 789Conclusion

This article has covered the fundamentals of Shazam from start to finish. This included an introduction to Fourier analysis, the process of analysing a signal in terms of the frequencies that compose it.

There are countless improvements that I'm sure could be made to improve the time and storage requirements at various steps, and doubtless improvements could be made to the Fourier analysis to work better in a wider variety of environments - but this implementation still fundamentally works.

Modern version of Shazam or Google's own version have no doubt evolved since 2002 - in fact, Google's version can identify a song simply from a person humming the tune, which this implementation absolutely cannot do. An intersting observation users of Shazam made was that the algorithm is sensitive to slight changes in timing or pitch - a band performing their song live would often not produce a match to their song in the database. This can easily be used to out artists who just lip sync to a recording, as early Shazam would produce an exact match! Modern machine learning based approaches are not sensitive to this, and work with live recordings as well, offering a natural evolution of this project.

The code for this project is available on GitHub